On 7 June 2023, KPMG hosted an event in Amstelveen to celebrate 50 years of Compact. Over 120 participants gathered to explore the challenges and opportunities surrounding Digital Trust. In addition to a session by Alexander Klöpping, journalist and tech entrepreneur, the event offered four interactive workshops on ESG, AI Algorithms, Digital Trust, and the upcoming EU Data Acts, giving participants from various industries and organizations insights and take-aways for dealing with their digital challenges.

Introduction

In the fifty years since Compact was first published, technology has evolved in ways that people could never have imagined. Despite the countless possibilities, questions of trust and data privacy have become more critical than ever. ChatGPT represents a significant advancement in “understanding” and generating human-like text and programming code, and it is hard to predict what AI algorithms will make possible in the next fifty years. Ethical considerations and controversies require action now. With technologies advancing this rapidly, how can people and organizations expect to protect their own interests and privacy in terms of Digital Trust?

Together with Alexander Klöpping, journalist and tech entrepreneur, the participants had an opportunity to embark on a journey to evaluate the past, improve the present and learn how to embrace the future of Digital Trust.

In this event recap, we will guide you through the event and workshop topics to share important take-aways from the ESG, AI Algorithms, Digital Trust, and upcoming EU Data Acts workshops.

Foreseeing the Future of Digital Trust

Soon, a personally written article like this could become a rarity, as most texts might be AI-generated. That is one of the predictions about AI development that Alexander Klöpping shared during his session “Future of Digital Trust”. Over the past few years, generative AI has advanced significantly, creating revolutionary opportunities for producing and processing text, images, code, and other types of data. Besides these innovative opportunities, such rapid development also carries high risks for the reliability of AI-generated outputs and the security of sensitive data. Many guardrails around Digital Trust still need to be put in place before AI-generated outputs can be adopted; even so, Alexander’s talk pointed to a possible future of Artificial General Intelligence (AGI) that can learn, think, and produce output with human-level intelligence.

Digital Trust is a crucial topic for the near future and a recurring theme in all areas, from sustainability to upcoming EU regulations on data, platforms and AI. Anticipated challenges and best practices were discussed during the interactive workshops with more than a hundred participants, including C-level management, board members and senior management.

Workshop “Are you already in control of your ESG data?”

Together with the KPMG speakers, guest speaker Jurian Duijvestijn, Finance Director Sustainability at FrieslandCampina, shared the company’s ESG journey in preparation for the Corporate Sustainability Reporting Directive (CSRD).

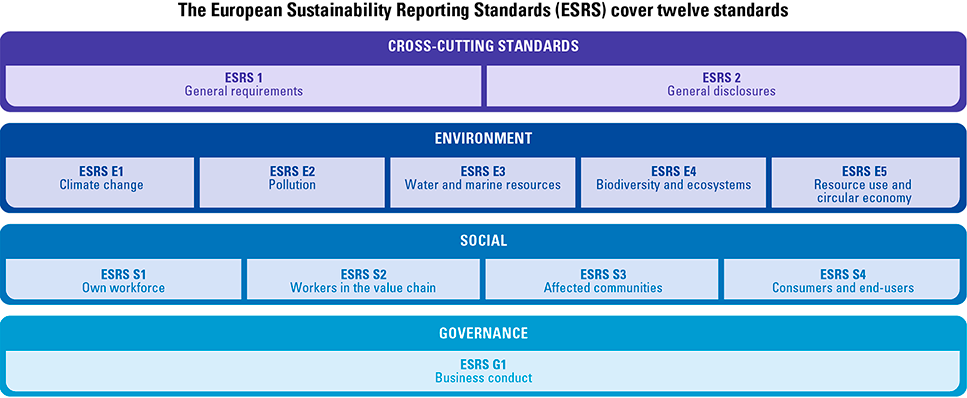

Sustainability reporting is moving from a scattered EU landscape to new mandatory European reporting standards. As shown in Figure 1, the European Sustainability Reporting Standards (ESRS) consist of twelve standards, including ten topical standards for the Environment, Social and Governance areas.

Figure 1. CSRD Standards.

CSRD requires companies to report on the impact of corporate activities on the environment and society, as well as the financial impact of sustainability matters on the company, which results in an extensive set of financial and non-financial metrics. The CSRD implementation will take place in phases, starting with the large companies already covered by the Non-Financial Reporting Directive and continuing with other large companies (FY25), SMEs (FY26) and non-EU parent companies (FY28). The required changes to corporate reporting should be implemented rapidly to ensure timely compliance, as the companies in scope of the first phase must publish their reports in 2025 based on 2024 data. The integration of sustainability at all levels of the organization is essential for a smooth transition. As pointed out by the KPMG speakers, Vera Moll, Maurice op het Veld and Eelco Lambers, a sustainability framework should be incorporated in all critical business decisions, going beyond corporate reporting and transforming business operations.

The interactive breakout activities confirmed that adopting sustainability reporting is a challenging task for many organizations due to new KPIs, changes to calculation methodologies, low ESG data quality and tooling that is not fit for purpose. In line with the topic of the Compact celebration, the development of the required data flows depends on a trustworthy network of suppliers and the development of strategic partnerships at an early stage of adoption.

CSRD is a reporting framework that companies could use to shape their strategy to become sustainable at all organizational and process levels. Most companies have already started to prepare for CSRD reporting, but anticipate a challenging project both internally (data accessibility and quality) and externally (supply chains). While considerable effort is required to be ready in time, the transition period also provides a unique opportunity to measure organizational performance from an ESG perspective and to transform so that sustainability becomes an integral part of the brand story.

Workshop “Can your organization apply data analytics and AI safely and ethically?”

The rapid rise of ChatGPT has sparked a major change. Every organization now needs to figure out how AI fits in, where it is useful, and how to use it well. But using AI also raises major questions, for example in the field of AI ethics: how much should you tell your customers if you used ChatGPT to help write a contract?

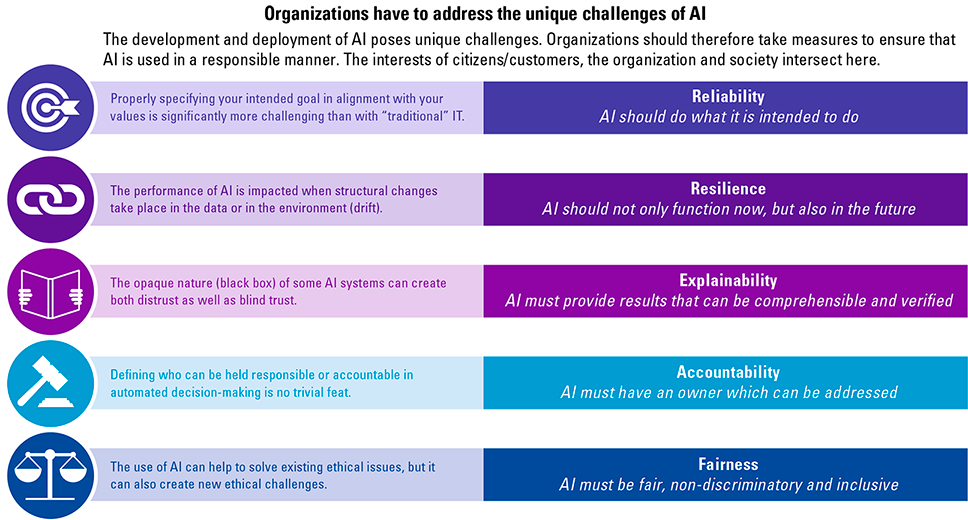

During the Responsible AI workshop, facilitators Marc van Meel and Frank van Praat, both from KPMG’s Responsible AI unit, presented real-life examples that illustrate the challenges encountered when implementing AI. They introduced five important principles in which ethical dilemmas can surface: the Reliability, Resilience, Explainability, Accountability, and Fairness of AI systems (see Figure 2). After these principles had been introduced and elaborated, the workshop participants engaged in animated discussions, exploring a number of benefits and drawbacks associated with AI.

Figure 2. Unique challenges of AI.

To quantify those challenges of AI, there are three axes organizations can use: Complexity, Autonomy, and Impact (see Figure 3).

Figure 3. Three axes for quantifying AI risks.

Because ChatGPT was quite new when the workshop took place (and still is today), it was top of mind for everyone in the session. One issue that received substantial attention was how ChatGPT might affect privacy and company-sensitive information. It’s like being caught between two sides: on the one hand, you want to use this powerful technology and give your staff the freedom to use it too. On the other hand, you have to adhere to privacy rules and make sure your important company data remains confidential.

The discussion concluded by stressing the importance of the so-called “human in the loop”: it is crucial that employees understand the risks of AI systems such as ChatGPT when using them, and some level of human intervention should be mandatory. This led to another dilemma, namely how to find the right balance between humans and machines (e.g. AI). Everyone agreed that how humans and AI should work together depends on the specific AI context. One thing was clear: the challenges with AI are not just about the technology itself. The rules (e.g. privacy laws) and practical aspects (what the AI is actually doing) also matter significantly when we talk about AI and ethics.

There are upsides as well as downsides when working with AI. How do you deal with privacy-related documents that are uploaded to a (public) cloud platform with a Large Language Model? What if you create a PowerPoint presentation with ChatGPT and decide not to tell your audience? There are many ethical dilemmas, such as the lack of transparency of AI tools, discrimination due to misuse of AI, and Generative AI-specific concerns such as intellectual property infringement.

However, ethical dilemmas are not the sole considerations. As shown in Figure 4, practical and legal considerations can also give rise to dilemmas in various ways.

Figure 4. Dilemmas in AI: balancing efficiency, compliance, and ethics.

The KPMG experts and participants agreed that simply blocking the use of this type of technology would be impossible, and that it would be better to prepare employees, for instance by providing privacy training and encouraging critical thinking, so that they use Generative AI in a responsible manner. The key is to consider which type of AI provides added value as well as the associated cost of control.

After addressing the dilemmas, the workshop leaders concluded with some final questions and thoughts about responsible AI. People were interested in the biggest risks tied to AI, which match the five principles discussed earlier (see Figure 2). But the key lesson from the workshop was a bit different: using AI does involve balancing benefits and challenges, but opportunities should have priority over risks.

Workshop “How to achieve Digital Trust in practice?”

This workshop was based on KPMG’s recent work with the World Economic Forum (WEF) on Digital Trust and was presented by Professor Lam Kwok Yan (Executive Director, National Centre for Research in Digital Trust of the Nanyang Technological University, Singapore), Helena Koning (Mastercard) and Augustinus Mohn (KPMG). The workshop covered the background and elements of Digital Trust, trust technologies, and digital trust in practice, followed by group discussions.

Figure 5. Framework for Digital Trust ([WEF22]).

The WEF Digital Trust decision-making framework can boost trust in the digital economy by enabling decision-makers to apply so-called Trust Technologies in practice. Organizations are expected to consider security, reliability, accountability, oversight, and the ethical and responsible use of technology. A group of major private and public sector organizations around the WEF (incl. Mastercard) is planning to operationalize the framework in order to achieve Digital Trust (see also [Mohn23]).

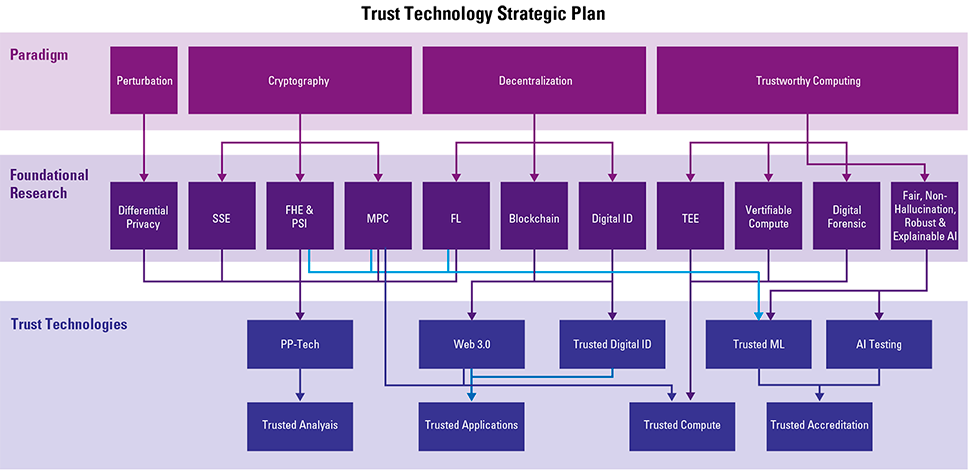

Professor Lam explained how Singapore has been working to advance its scientific research capabilities in Trust Technology. The Singapore government recognized the importance of Digital Trust and provided $50 million in funding for the Digital Trust Centre, the national center for research in trust technology. While digitalization of the economy is important, data protection is an immediate concern. Concerns about distrust are creating opportunities to develop Trust Technologies. Trust Technology aims not only to identify which technologies can be used to enhance people’s trust, but also to define concrete, implementable functionality for the areas shown in Figures 6 and 7, as presented during the workshop.

Figure 6. Areas of opportunity in Trust Technology (source: Professor Lam Kwok Yan).

Figure 7. Examples of types of Trust Technologies (source: Professor Lam Kwok Yan).

Presentation by Professor Lam Kwok Yan (Nanyang Technological University), Helena Koning (Mastercard) and Augustinus Mohn (KPMG).

Helena Koning from Mastercard shared how Digital Trust is put into practice at Mastercard. One example was data analytics for fraud prevention. In designing this AI-based technology, Mastercard needed to consider several aspects of Digital Trust: it applied a privacy guideline, performed “bias testing” for data accuracy, and addressed the auditability and transparency of AI tools. Another example was helping society with anonymized data while complying with data protection rules. When many refugees arrived from Ukraine, Poland needed to analyze how many Ukrainians were currently in Warsaw. Mastercard supported this effort by anonymizing and analyzing the data. Neither could have been achieved without suitable Trust Technologies.

In the discussion at the end of the workshop, further use cases for Trust Technology were discussed. Many of the participants had questions on how to utilize (personal) data while securing privacy. Technology alone cannot always solve such a problem entirely, so policies and/or processes also need to be reviewed and addressed. For example, in a case of pandemic modeling for healthcare organizations, the modeling was enabled without using actual data in order to comply with privacy legislation. In an advertising case, cross-platform data analysis was enabled to satisfy customers, while the solution ensured that the data was not shared among competitors. The workshop also noted that content labeling is important for identifying original data and preventing fake information from spreading.

For organizations, it is important to build Digital Trust by identifying suitable technologies and ensuring good governance of the chosen technologies to realize their potential for themselves and society.

Workshop “How to anticipate upcoming EU Data regulations?”

KPMG specialists Manon van Rietschoten (IT Assurance & Advisory), Peter Kits (Tech Law) and Alette Horjus (Tech Law) discussed the upcoming data-related EU regulations. An interactive workshop explored the impact of upcoming EU Digital Single Market regulations on business processes, systems and controls.

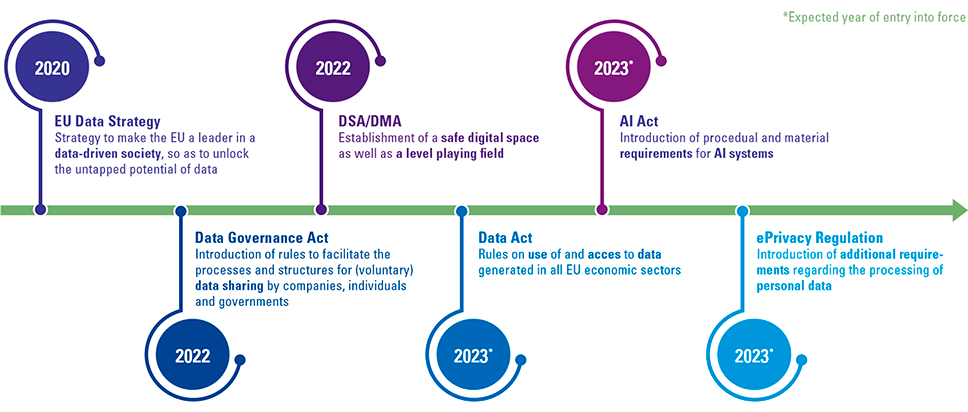

The EU Data Strategy was introduced in 2020 to unlock the potential of data and establish a single European data-driven society. Using the principles of the Treaty on the Functioning of the European Union (TFEU), the Charter of Fundamental Rights of the EU (CFREU) and the General Data Protection Regulation (GDPR), the EU Data Strategy encompasses several key initiatives that collectively work towards achieving its overarching goals. Such initiatives include entering into partnerships, investing in infrastructure and education and increased regulatory oversight resulting in new EU laws and regulations pertaining to data. During the workshop, there was focus on the latter and the following regulations were highlighted:

- The Data Act

- The Data Governance Act

- The ePrivacy Regulation

- The Digital Markets Act

- The Digital Services Act and

- The AI Act.

Figure 8. Formation of the EU Data Economy.

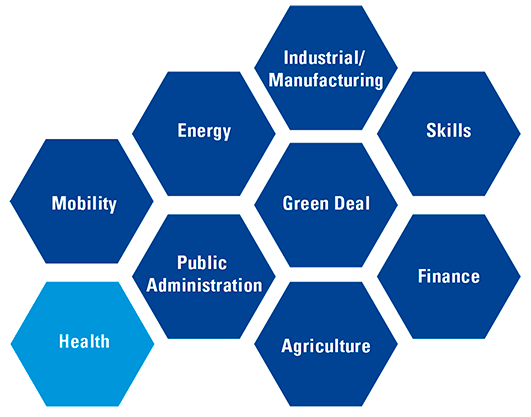

During the workshop participants also explored the innovative concept of EU data spaces. A data space, in the context of the EU Data Strategy, refers to a virtual environment or ecosystem that is designed to facilitate the sharing, exchange, and utilization of data within a specific industry such as healthcare, mobility, finance and agriculture. It is essentially a framework that brings together various stakeholders, including businesses, research institutions, governments, and other relevant entities, to collaborate and share data for mutual benefit while ensuring compliance with key regulations such as the GDPR.

The first EU Data Space, the European Health Data Space (EHDS), is expected to be operational in 2025. The impact of the introduction of the EU Data Spaces is significant and should not be underestimated: each Data Space has a separate regulation for sharing and using data.

Figure 9. European Data Spaces.

The changes required by organizations to ensure compliance with the new regulations pose a great challenge, but will create data-driven opportunities and stimulate data sharing. This workshop provided a platform for stakeholders to delve into the intricacies of newly introduced regulations and discuss the potential impact on data sharing, cross-sector collaboration, and innovation. There was ample discussion scrutinizing how the EU Data Strategy and the resulting regulations could and will reshape the data landscape, foster responsible AI, and bolster international data partnerships while safeguarding individual privacy and security.

The key topics raised by the workshop participants were the necessity of trust and the availability of technical standards to substantiate the requirements of the Data Act. In combination with the regulatory pressure, the anticipated challenges create a risk that companies become compliant on paper only. The discussions confirmed that trust is essential, as security and privacy concerns were also voiced by the participants: “If data is out in the open, how do we inspire trust? Companies are already looking into ways not to have to share their data.”

In conclusion, the adoption of new digital EU Acts is an inevitable but interesting endeavor; however, companies should also focus on the opportunities. The new regulations require a change in vision, a strong partnership between organizations and a solid Risk & Control program.

In the next Compact edition, the workshop facilitators will dive deeper into the upcoming EU Acts.

Conclusion

The workshop sessions were followed by a panel discussion between the workshop leaders. The audience was united in the view that adopting the latest developments in the area of Digital Trust requires a significant effort from organizations. To embrace the opportunities, they need to keep an open mind while being proactive in mitigating the risks that may arise with technology advancements.

The successful event concluded with a warm “thank you” to the three previous Editors-in-Chief of Compact, who oversaw the magazine for half a century, highlighting how far Compact has come. Starting as an internal publication in the early seventies, Compact has become a leading magazine covering IT strategy, innovation, auditing, security/privacy/compliance and (digital) transformation topics, with the ambition to continue for another fifty years.

Maurice op het Veld (ESG), Marc van Meel (AI), Augustinus Mohn (Digital Trust) and Manon van Rietschoten (EU Data Acts).

Editors-in-Chief (from left to right): Hans Donkers, Ronald Koorn and Dries Neisingh (Dick Steeman not included).

References

[Mohn23] Mohn, A. & Zielstra, A. (2023). A global framework for digital trust: KPMG and World Economic Forum team up to strengthen digital trust globally. Compact 2023(1). Retrieved from: https://www.compact.nl/en/articles/a-global-framework-for-digital-trust-kpmg-and-world-economic-forum-team-up-to-strengthen-digital-trust-globally/

[WEF22] World Economic Forum (2022). Earning Digital Trust: Decision-Making for Trustworthy Technologies. Retrieved from: https://www.weforum.org/reports/earning-digital-trust-decision-making-for-trustworthy-technologies/